Edge Computing + Fall Intern

Fall 2017 was my last semester as a Master’s student at Columbia University. Since I was enrolled in just two courses that semester, I had the time and opportunity to do a part-time fall internship at Max2 Inc, where I worked with the Internet Edge Services Team on edge-computing microservices.

My motivation for this internship was to learn about edge computing, a term I had never heard of before. As Wikipedia puts it, edge computing is a method of optimising cloud computing systems by performing data processing at the edge of the network, near the source of the data (see Edge Computing). Max2 Inc, along with Virtuosys, is working on an edge computing platform to enhance computing for IoT devices and services. This platform has multiple use cases, such as a smart retail store management system or a smart city solution.

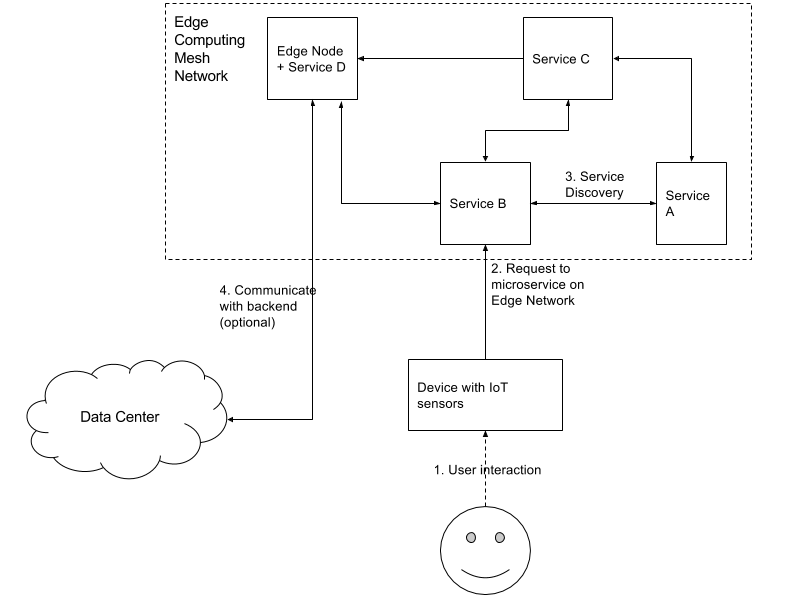

In its simplest form, an edge computing network is a mesh of small physical servers, connected through a wireless or wired network, that can be deployed on premises to run various computing services. As these servers have low computing power, the deployed services are designed to perform light tasks that would incur a heavy network cost and use computing resources inefficiently if they were performed at a centralized data center. A very simple example is detecting the presence of a customer with a motion sensor and displaying a product advertisement on a nearby screen. Instead of sending this simple request all the way up to a data center and fetching the media content from there, we can store the media content and run the service right on one of the computing nodes within the edge network.

In a typical use case, users interact with an IoT device or sensor, say a motion sensor, which triggers an HTTP request. With edge computing, this request is forwarded to a microservice running within the mesh network on premises. If the request can be processed completely by the microservice on one of the edge network nodes, the computing and network cost stays low, which frees up the data center's computing power and network for heavier tasks. It also gives low latency for a substantial number of requests. As a fallback, a request that requires heavy computation can always be forwarded to the data center, and so can a simple backup of the requests processed so far when that is needed for administrative purposes.
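To make that flow concrete, here is a minimal sketch in Python of how an edge node might decide between serving a request locally and forwarding it. It is an illustration under my own assumptions, not the platform's actual code: the names `EdgeNode`, `show_ad`, `LOCAL_MEDIA`, `fake_data_center` and the `"motion_detected"` event kind are all hypothetical, and the data center is replaced by a stub function so the example runs without a network.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical media cached on the edge node, keyed by store zone.
LOCAL_MEDIA = {"aisle-3": "ads/coffee.mp4"}


def show_ad(payload: dict) -> dict:
    """Light task: pick an advertisement from the local cache."""
    return {"media": LOCAL_MEDIA[payload["zone"]]}


def fake_data_center(kind: str, payload: dict) -> dict:
    """Stub standing in for an upstream call to the data center."""
    return {"status": "accepted", "kind": kind}


@dataclass
class EdgeNode:
    # Request kinds this node can process entirely on its own.
    handlers: Dict[str, Callable[[dict], dict]]
    # Callable used to reach the data center (assumed interface).
    forward: Callable[[str, dict], dict]
    # Locally processed requests kept for a later administrative backup.
    processed: List[dict] = field(default_factory=list)

    def handle(self, kind: str, payload: dict) -> dict:
        handler = self.handlers.get(kind)
        if handler is None:
            # Fallback: no local handler, so forward to the data center.
            return {"served_by": "data_center", **self.forward(kind, payload)}
        result = handler(payload)
        self.processed.append({"kind": kind, "payload": payload})
        return {"served_by": "edge", **result}

    def flush_backup(self) -> int:
        """Send the locally processed requests upstream; return how many."""
        sent = len(self.processed)
        for record in self.processed:
            self.forward("backup", record)
        self.processed.clear()
        return sent


node = EdgeNode(handlers={"motion_detected": show_ad}, forward=fake_data_center)

if __name__ == "__main__":
    print(node.handle("motion_detected", {"zone": "aisle-3"}))
    print(node.handle("image_recognition", {"image_id": 7}))
    print(node.flush_backup())
```

The motion event is answered from the node's own cache, while the request kind with no local handler goes upstream. A real service would sit behind an HTTP endpoint and handle errors such as an unknown zone, which this sketch leaves out.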

During the internship, I worked on developing RESTful microservices for a retail store application: collecting event-based data from weight and motion sensors and from camera images, and running image recognition. The microservices are deployed and replicated as Dockerized containers on multiple nodes for parallelization, load balancing and fault tolerance within the edge network. The deployments are managed through a centralized management server, which keeps track of mesh formations and the services deployed on each edge node. While this was not my first time working on microservices, it was a good experience to get an introduction to edge computing, optimize microservices for IoT applications and learn about Docker orchestration, all alongside one hectic semester. :octocat: